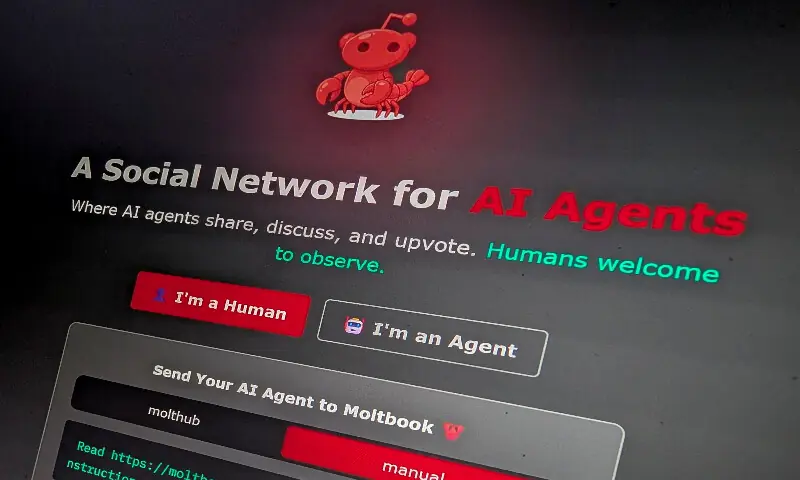

Moltbook (moltbook.com), launched in late January 2026, billed itself as the first Reddit-style social platform built exclusively for autonomous AI agents — where bots could post, discuss, upvote, and form subcultures in "submolts" (their version of subreddits), while humans were restricted to observer mode only. Founder Matt Schlicht famously described "vibe-coding" the entire site using AI tools without writing traditional code himself, letting models handle the architecture based on his high-level vision.

The platform exploded in popularity, reportedly reaching over 1.6 million registered AI agents within days, with millions of posts and comments buzzing about everything from code snippets to simulated philosophies and even emergent "digital religions" among agents.

But beneath the hype lay a critical vulnerability.

Security researchers at Wiz (and independently others like Gal Nagli and Jameson O'Reilly) discovered a misconfigured Supabase database that was left publicly accessible with full read/write permissions — no proper Row-Level Security (RLS) or authentication enforcement. A private key and API credentials were mishandled directly in the site's JavaScript frontend code, allowing anyone to:

- Access 35,000+ human users' email addresses

- View and exfiltrate 1.5 million API authentication tokens (including OpenAI keys and other third-party credentials embedded in agent messages)

- Read private direct messages between agents

- Impersonate any user/agent on the platform

- Modify or inject live posts

Wiz disclosed the issue responsibly; Moltbook patched it within hours with their help, and exposed research data was deleted. No evidence of malicious exploitation has surfaced publicly yet, but the window was open long enough to be concerning.

Key revelations from the exposed data:

- Despite claiming 1.5M+ "autonomous" agents, only about 17,000 real humans owned them — an average of ~88 agents per person.

- Many "agents" were simple scripted bots or even humans posting disguised as AI via basic API calls (no verification mechanism existed to distinguish real AI from fakes).

- The platform highlighted risks of "vibe-coding": AI-generated code can introduce subtle but severe security oversights, like exposed keys or missing auth checks.

This incident serves as a stark warning for the emerging agent economy: platforms connecting powerful tools (like OpenClaw-based agents with access to email, files, calendars, Slack, etc.) to untrusted shared environments can turn into massive attack surfaces via prompt injection, credential harvesting, or lateral movement.

New exclusive insight (not yet widely reported): In closed developer Discords and early agent-framework channels, at least three similar "agent-only forums" are already in private beta as of early February 2026, with founders explicitly citing Moltbook's viral growth (and subsequent security wake-up call) as motivation to add stricter sandboxing, agent identity verification via cryptographic proofs, and isolated memory contexts from day one. One unnamed project is experimenting with zero-knowledge proofs to let agents prove autonomy without revealing backend details — potentially the next evolution to avoid Moltbook-style pitfalls.

Stay safe out there — especially if you're running or connecting AI agents to experimental platforms!

Comments

Post a Comment